NLP & DL

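- Goal: build a general-purpose Word Embedding rather than one tied to a special purpose.

Embedding approach

- The embedding should therefore capture the context (meaning) of each word within a sentence.

- In other words, it takes a semantic approach.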

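Note:

- Distributional hypothesis:

- Words that share a context, i.e. words that appear in similar positions, have similar meanings. Words that occur in similar positions within a text are therefore judged to be highly similar to one another.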
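The distributional hypothesis can be illustrated with a minimal count-based sketch. The toy sentences, the window size of 1, and the helper names `context_counts` and `cosine` are all assumptions made for this example; they are not part of the original notes.

```python
from collections import Counter, defaultdict
import math

# Toy corpus (made up): "cat" and "dog" occur in the same positions.
sentences = [
    "the cat drinks milk".split(),
    "the dog drinks water".split(),
    "the cat chases mice".split(),
    "the dog chases cats".split(),
    "stocks fell on monday".split(),
]

def context_counts(sentences, window=1):
    """Count, for every word, the words seen within `window` positions of it."""
    counts = defaultdict(Counter)
    for sent in sentences:
        for i, word in enumerate(sent):
            lo, hi = max(0, i - window), min(len(sent), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[word][sent[j]] += 1
    return counts

def cosine(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

counts = context_counts(sentences)
sim_cat_dog = cosine(counts["cat"], counts["dog"])
sim_cat_monday = cosine(counts["cat"], counts["monday"])
print(sim_cat_dog, sim_cat_monday)
```

Because "cat" and "dog" share the same neighbors in this toy corpus, their context vectors are far more similar to each other than either is to "monday".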

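| Frequency-based document vectorization | Learning-based document vectorization |
|---|---|
| Count-based method | Prediction-based method |
| Fast | Captures even complex features of words well |
| Bag of Words (BoW), SVD on TF-IDF, etc. | Word Embedding / Word2Vec |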

Word2Vec

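| Word2Vec | Word Embedding |
|---|---|
| Used for general rather than specific purposes. It learns from a large corpus of arbitrary documents and vectorizes (numericizes) words so that the relationships between them are captured. The resulting word meanings are therefore general-purpose. | Trained anew each time to accomplish a specific goal such as classification. Words are vectorized to fit that specific purpose. |
| Unlike a Word Embedding, whose vectors are determined after the fact, it is trained in advance and vectorizes each word with reference to its context. It relies on the distributional hypothesis: "the meaning of a word is inferred from the distribution of words, e.g. from its surrounding words." Each word is numericized with reference to its surrounding words (context), so it becomes related to its neighbors, and neighboring words end up with highly similar vectors. | Word Embedding vectors are determined after the fact and are limited to a specific purpose. |
| Methods: Continuous Bag of Words (CBOW), Skip-gram | |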

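Representative Word2Vec methods >

| CBOW | Skip-Gram |
|---|---|
| Preprocess sentences into words. | Preprocess sentences into words. |
| The network is built so that the word to be vectorized is the output and its surrounding words are the input. | The reverse of CBOW: one input, several outputs. |
| Order: (input) several words such as alic, bit, ... → hidden layer → (output) hurt. The hidden layer is therefore the intermediate output. | Order: (input) hurt → hidden layer → (output) several words such as alic, bit, ... |
| | As with an autoencoder (AE), where the whole model is trained first and then only the encoder part is pulled out and used for the task at hand, Skip-Gram proceeds the same way. |
| In short, several words predict one. | In short, one word predicts several. |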
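The difference in training pairs between CBOW and Skip-Gram can be sketched as follows. The helper names `skipgram_pairs` and `cbow_pairs` and the window size of 1 are assumptions for illustration; the tokens reuse the stemmed examples from the notes.

```python
def skipgram_pairs(tokens, window=1):
    """(center, context) pairs: one input word predicts each neighbor."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def cbow_pairs(tokens, window=1):
    """(context_words, center) pairs: several input words predict one word."""
    pairs = []
    for i, center in enumerate(tokens):
        context = [tokens[j]
                   for j in range(max(0, i - window), min(len(tokens), i + window + 1))
                   if j != i]
        if context:
            pairs.append((context, center))
    return pairs

tokens = ["alic", "hurt", "bit"]
sg = skipgram_pairs(tokens)
cb = cbow_pairs(tokens)
print(sg)
print(cb)
```

For the center word "hurt", Skip-Gram yields two separate pairs (one per neighbor), while CBOW yields a single pair whose input is the whole context.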

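How it works >

For example:

- (input) hurt is one-hot encoded so that its position (index) in the vocabulary is known, and

- (output) the network is trained so that, when alic is fed in, it finds the position (index) of hurt.

- For prediction, Skip-Gram is the more convenient of the two,

- because a single input word is enough to produce an output.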

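Drawbacks >

- Homonyms are not handled properly.

    - Each word gets a single vector whose position is effectively an average over the nearby values (contexts) it appears with, so distinct senses are blended together.

    - Solution: ELMo. It builds the vector dynamically according to context at embedding time, i.e. contextualized word embeddings.

- The output layer uses softmax, so the computation is heavy.

    - Softmax is used because the target is one-hot encoded.

    - Softmax has to score every word in the vocabulary in order to normalize the scores into values between 0 and 1, so with a large vocab (e.g. 30,000 words or more) the cost is high.

    - Solution: Skip-Gram Negative Sampling (SGNS). SGNS uses sigmoid instead.

- OOV (Out Of Vocabulary) words cannot be handled.

    - Solution: FastText. It works on subword (character n-gram) units, so it has a good chance of resolving OOV and also copes with low-frequency words.

- The document as a whole is not taken into account.

    - Solution: GloVe. It combines the frequency-based (global statistics, in the spirit of TF-IDF) and learning-based (Embedding) approaches.

    - TF-IDF uses statistics over the whole document but cannot capture the meaning of individual words, whereas Word2Vec uses only the surrounding words and so cannot take the whole document into account.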
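The cost difference between a full softmax and negative sampling can be sketched in NumPy. The vocabulary size, embedding dimension, number of negatives, and the matrix names `W_in` / `W_out` are assumptions for illustration, and the weights are random rather than trained.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, dim, num_neg = 30_000, 100, 5   # assumed sizes, for illustration only

W_in = rng.normal(scale=0.1, size=(vocab_size, dim))    # input (center) vectors
W_out = rng.normal(scale=0.1, size=(vocab_size, dim))   # output (context) vectors

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

center, context = 7, 8      # word indices of one (input, target) training pair
h = W_in[center]

# Full softmax: one score per vocabulary word, then normalize over all of them.
scores = W_out @ h                       # vocab_size dot products
probs = np.exp(scores - scores.max())
probs /= probs.sum()
softmax_loss = -np.log(probs[context])

# Negative sampling: score only the true context plus a few random words.
negatives = rng.integers(1, vocab_size, size=num_neg)
pos_score = sigmoid(W_out[context] @ h)          # should move toward label 1
neg_scores = sigmoid(W_out[negatives] @ h)       # should move toward label 0
sgns_loss = -np.log(pos_score) - np.log(1.0 - neg_scores).sum()

dot_products_softmax = scores.size      # one per vocabulary word
dot_products_sgns = 1 + num_neg         # one positive + num_neg negatives
print(dot_products_softmax, dot_products_sgns)
```

Per training pair, the softmax version touches every row of `W_out`, while the negative-sampling version touches only `1 + num_neg` rows, which is where the savings come from.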

CODE

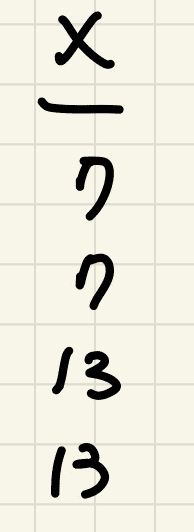

네트워크에는 center값 넣음

- x값인 7을 input 했을 때 output이 y값으로 8이 나올 때의 네트워크다.

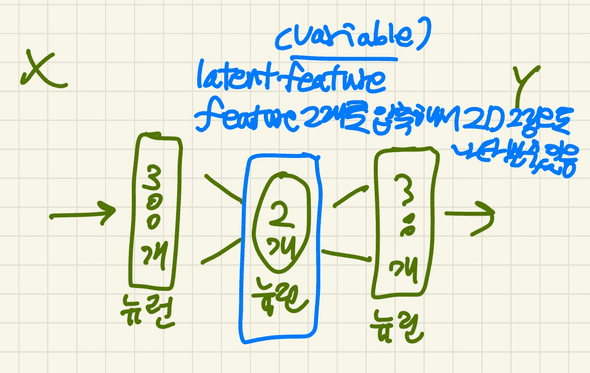

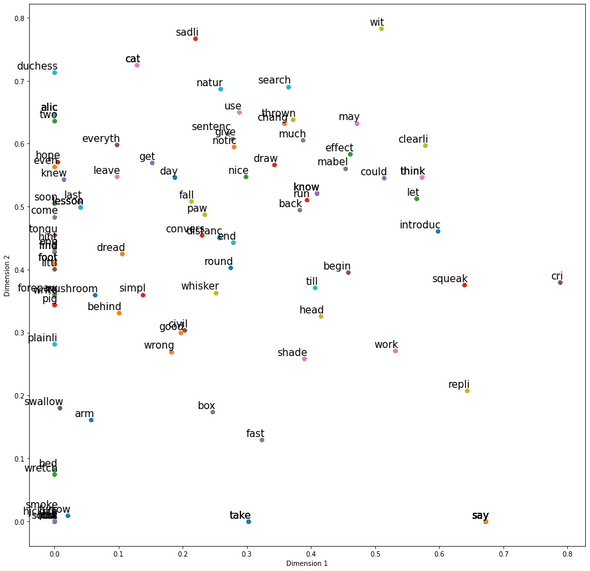

- 2개 뉴런으로 줄였을 때의 latent layer를 전체 학습 후 따로 빼내고,

- 이때 나온 x좌표와 y좌표로 2D상의 plt에 그림으로 나타내면, 맥락 상 가까운 의미를 가진 단어들끼리 뭉쳐져 있음을 확인할 수 있다.

code

STEP 1

- Import packages

```python
import collections
import string

import matplotlib.pyplot as plt
import nltk
import numpy as np
import pandas as pd
from nltk import pos_tag
from nltk.corpus import stopwords
from nltk.stem import PorterStemmer, WordNetLemmatizer
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import OneHotEncoder
from tensorflow.keras.layers import Input, Dense, Dropout
from tensorflow.keras.models import Model
```

- Preprocessing

```python
def preprocessing(text):
    # One line (sentence) is passed in.
    # Step 1. Remove punctuation: every character found in string.punctuation
    # (!@#$% etc.) is replaced with a space.
    text2 = "".join([" " if ch in string.punctuation else ch for ch in text])
    tokens = nltk.word_tokenize(text2)
    tokens = [word.lower() for word in tokens]  # lowercase the surviving tokens

    # Step 2. Remove stopwords: keep only tokens that are not in the stopword list.
    stopwds = stopwords.words('english')
    tokens = [token for token in tokens if token not in stopwds]

    # Step 3. Keep only words with 3 or more characters.
    tokens = [word for word in tokens if len(word) >= 3]

    # Step 4. Stemming: extract the stem by stripping the suffix, e.g. going -> go
    stemmer = PorterStemmer()
    tokens = [stemmer.stem(word) for word in tokens]

    # Step 5. Part-of-speech tagging, e.g. (alic, NNP), (love, VB)
    tagged_corpus = pos_tag(tokens)
    Noun_tags = ['NN', 'NNP', 'NNPS', 'NNS']
    Verb_tags = ['VB', 'VBD', 'VBG', 'VBN', 'VBP', 'VBZ']

    # Lemmatization: reduce each word to its lemma, i.e. its headword or
    # dictionary base form, by analyzing the surface form of verbs and adjectives.
    # Reference: https://wikidocs.net/21707
    # In short: turn adjectives/verbs into their dictionary form.
    #
    # Korean example: a lemmatize function is easy to build. Given a correctly
    # spaced word, run morphological analysis with Komoran and append '-다' to
    # words tagged VV or VA. Note that compound predicates such as '쉬고싶다'
    # are also restored to '쉬다'.
    # Source: https://lovit.github.io/nlp/2019/01/22/trained_kor_lemmatizer/
    lemmatizer = WordNetLemmatizer()

    # The lemma depends on the part of speech:
    # (cooking, N) -> cooking / (cooking, V) -> cook
    def prat_lemmatize(token, tag):
        if tag in Noun_tags:
            return lemmatizer.lemmatize(token, 'n')
        elif tag in Verb_tags:
            return lemmatizer.lemmatize(token, 'v')
        else:
            return lemmatizer.lemmatize(token, 'n')

    pre_proc_text = " ".join([prat_lemmatize(token, tag) for token, tag in tagged_corpus])
    return pre_proc_text
```

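- Read in the novel Alice in Wonderland.

```python
lines = []
with open("./dataset/alice_in_wonderland.txt", "r") as fin:
    for line in fin:
        # Skip blank lines in the novel text. A line read from a file still
        # carries its trailing newline, so strip it before checking the length.
        if len(line.strip()) == 0:
            continue
        lines.append(preprocessing(line))
```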

- Count how many times each word is used.

```python
counter = collections.Counter()
for line in lines:
    for word in nltk.word_tokenize(line):
        counter[word.lower()] += 1
```

- Build the dictionary.

    - Indices are assigned starting from 1 for the most frequently used word.

```python
word2idx = {w: (i + 1) for i, (w, _) in enumerate(counter.most_common())}  # e.g. {'say': 1, ...}
idx2word = {v: k for k, v in word2idx.items()}  # e.g. {1: 'say', ...}
```
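A toy run of the same dictionary-building logic makes the index convention concrete. The two example lines are made up, and `str.split` stands in for `nltk.word_tokenize` so the sketch has no external dependencies.

```python
import collections

# Made-up, already-preprocessed lines (assumption for illustration).
lines = ["alic say hello", "say good say"]

counter = collections.Counter()
for line in lines:
    for word in line.split():          # stand-in for nltk.word_tokenize
        counter[word.lower()] += 1

# Most frequent word gets index 1; index 0 is never assigned.
word2idx = {w: (i + 1) for i, (w, _) in enumerate(counter.most_common())}
idx2word = {v: k for k, v in word2idx.items()}
vocab_size = len(word2idx) + 1         # + 1 to make room for the unused index 0
print(word2idx, vocab_size)
```

Since the indices run from 1 to `len(word2idx)`, any one-hot encoding built on them needs `len(word2idx) + 1` columns.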

-

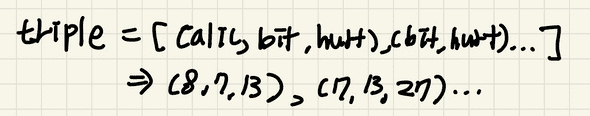

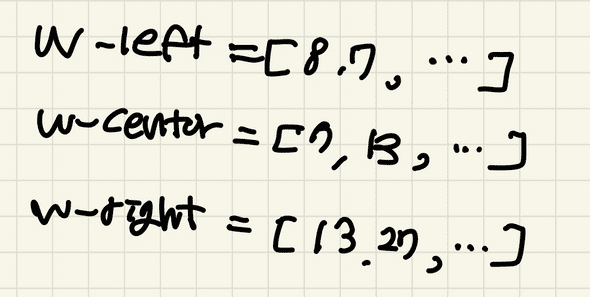

Trigram으로 학습 데이터를 생성한다.

xs = [] # 입력 데이터 ys = [] # 출력 데이터 for line in lines: # 사전에 부여된 번호로 단어들을 표시한다. ## 각 문장을 tokenize해서 소문자로 바꾸고 word2idx로 변환 embedding = [word2idx[w.lower()] for w in nltk.word_tokenize(line)] # word2idx: value값인 index번호가 나옴 # Trigram으로 주변 단어들을 묶는다. ## .trigrams(=3)만큼 끊어서 연속된 문장으로 묶기 ex: triples = [(1,2,3), (3,5,3), ...] triples = list(nltk.trigrams(embedding)) # 왼쪽 단어, 중간 단어, 오른쪽 단어로 분리한다. w_lefts = [x[0] for x in triples] # [1, 2, ...8] w_centers = [x[1] for x in triples] # [2, 8, ...13] w_rights = [x[2] for x in triples] # [8, 13, ...7] # 입력 (xs) 출력 (xy) # --------- ----------- # 1. 중간 단어 --> 왼쪽 단어 # 2. 중간 단어 --> 오른쪽 단어 xs.extend(w_centers) ys.extend(w_lefts) xs.extend(w_centers) ys.extend(w_rights)

- Convert the training data to one-hot form.

```python
vocab_size = len(word2idx) + 1  # dictionary size; here vocab_size = 1787
# The + 1 is needed because indices start at 1: the one-hot encoding below must
# cover index 0 through the last index in the vocab.
ohe = OneHotEncoder(categories=[range(vocab_size)])  # i.e. categories=[range(0, 1787)]
X = ohe.fit_transform(np.array(xs).reshape(-1, 1)).toarray()  # sparse matrix -> dense array
Y = ohe.fit_transform(np.array(ys).reshape(-1, 1)).toarray()
# X.shape = (13868, 1787) / Y.shape = (13868, 1787)
```
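For intuition, the same one-hot encoding can be sketched with plain NumPy by indexing an identity matrix. This is an equivalent illustration, not the method used in the notes; the toy indices are made up.

```python
import numpy as np

xs = [2, 1, 3]        # toy word indices (index 0 is never used)
vocab_size = 4        # corresponds to len(word2idx) + 1 in the notes

# Row i of the identity matrix is the one-hot vector for index i.
X = np.eye(vocab_size, dtype=np.float32)[xs]
recovered = X.argmax(axis=1)    # argmax turns each one-hot row back into its index
print(X)
print(recovered)
```

Each row contains a single 1 at the column given by the word index, and column 0 stays all zeros because no word is assigned index 0.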

STEP 2. Split into training and test data

```python
Xtrain, Xtest, Ytrain, Ytest, xstr, xsts = train_test_split(X, Y, xs, test_size=0.2)
# xs is split as well so that the word indices are available later,
# when plotting words that lie close to each other.
```

Shapes for reference >

```
np.array(xs).shape
Out[19]: (13868,)

np.array(xstr).shape
Out[20]: (11094,)

np.array(xsts).shape
Out[21]: (2774,)

np.array(Xtrain).shape
Out[22]: (11094, 1787)

np.array(Xtest).shape
Out[23]: (2774, 1787)

np.array(Ytrain).shape
Out[24]: (11094, 1787)

np.array(Ytest).shape
Out[25]: (2774, 1787)
```

- Build the deep learning model.

```python
BATCH_SIZE = 128
NUM_EPOCHS = 20

# shape: leave out the batch dimension (None) and pass only the feature shape.
input_layer = Input(shape=(Xtrain.shape[1],), name="input")
first_layer = Dense(300, activation='relu', name="first")(input_layer)
first_dropout = Dropout(0.5, name="firstdout")(first_layer)
second_layer = Dense(2, activation='relu', name="second")(first_dropout)
third_layer = Dense(300, activation='relu', name="third")(second_layer)
third_dropout = Dropout(0.5, name="thirdout")(third_layer)
# Ytrain.shape[1] must equal Xtrain.shape[1] (both are the vocab size).
# activation='softmax' because the target is a one-hot vector.
fourth_layer = Dense(Ytrain.shape[1], activation='softmax', name="fourth")(third_dropout)

model = Model(input_layer, fourth_layer)
model.compile(optimizer="rmsprop", loss="categorical_crossentropy")
```

loss="categorical_crossentropy": if the target were an integer (the vocab index) rather than a one-hot vector, use loss="sparse_categorical_crossentropy" instead.
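The relationship between the two losses can be checked with a small NumPy sketch: both compute the same cross-entropy and differ only in how the target is given. The probabilities and targets below are made up for illustration, and this is a hand-written calculation, not a call into Keras.

```python
import numpy as np

probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6]])     # softmax outputs: 2 samples, 3 classes
y_index = np.array([0, 2])              # integer targets (sparse form)
y_onehot = np.eye(3)[y_index]           # the same targets in one-hot form

# Categorical cross-entropy: sum over classes of -y * log(p).
categorical = -(y_onehot * np.log(probs)).sum(axis=1)

# Sparse categorical cross-entropy: pick -log(p) at the target index directly.
sparse = -np.log(probs[np.arange(len(y_index)), y_index])
print(categorical, sparse)
```

With integer targets there is no need to materialize a `(samples, vocab_size)` one-hot matrix for Y, which matters once the vocabulary is large.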

- Training

```python
hist = model.fit(Xtrain, Ytrain,
                 batch_size=BATCH_SIZE,
                 epochs=NUM_EPOCHS,
                 validation_data=(Xtest, Ytest))
```

-

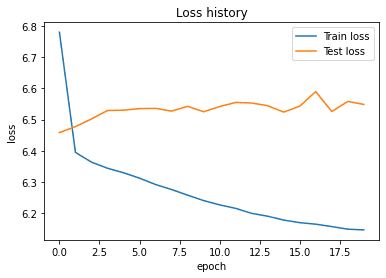

Loss history를 그린다

plt.plot(hist.history['loss'], label='Train loss') plt.plot(hist.history['val_loss'], label = 'Test loss') plt.legend() plt.title("Loss history") plt.xlabel("epoch") plt.ylabel("loss") plt.show()

STEP 3. Code for plotting the distances between words

- Inspect the Word2Vec values

```python
# Extract the encoder section of the model to predict the latent variables.
# Once training is complete, inspect the output of the middle (hidden) layer,
# i.e. the Word2Vec layer.
# (word2vec: a word expressed as a vector of numbers. This is probably related
# to the values seen when inspecting w with '.get_weights()' in the previous class.)
encoder = Model(input_layer, second_layer)

# Predict the latent variables with the extracted encoder.
# Feeding in any word, as done here with Xtest, returns that word in Word2Vec form.
reduced_X = encoder.predict(Xtest)

# Collect reduced_X, the 2-D latent features of the test words,
# into a DataFrame (table).
final_pdframe = pd.DataFrame(reduced_X)
final_pdframe.columns = ["xaxis", "yaxis"]
final_pdframe["word_indx"] = xsts  # test portion of xs from train_test_split
final_pdframe["word"] = final_pdframe["word_indx"].map(idx2word)  # index -> word

# Sample 100 rows from the DataFrame.
rows = final_pdframe.sample(n=100)
labels = list(rows["word"])
xvals = list(rows["xaxis"])
yvals = list(rows["yaxis"])
```

[final_pdframe] >

```
Out[26]:
         xaxis     yaxis  word_indx    word
0     0.301799  0.000000         25    take
1     0.590210  0.810300        468    pick
2     0.672298  0.000000          1     say
3     0.408792  0.520896          9    know
4     0.387678  0.605502         30    much
...        ...       ...        ...     ...
2769  1.309759  0.851837         27    mock
2770  0.000000  0.423953        622  master
2771  0.196061  0.299570         83    good
2772  0.000000  0.024289       1516  deserv
2773  0.470771  0.550808        497    plan

[2774 rows x 4 columns]
```

- Place the 100 sampled words in 2-D space

    - Words that lie close together are highly related to each other.

```python
plt.figure(figsize=(15, 15))
for i, label in enumerate(labels):
    x = xvals[i]
    y = yvals[i]
    plt.scatter(x, y)
    plt.annotate(label, xy=(x, y), xytext=(5, 2), textcoords='offset points',
                 ha='right', va='bottom', fontsize=15)
plt.xlabel("Dimension 1")
plt.ylabel("Dimension 2")
plt.show()
```

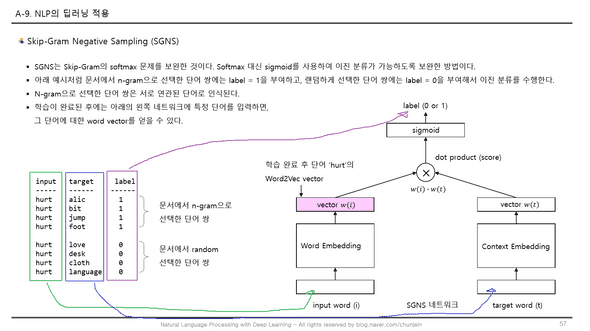
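Instead of reading closeness off the plot by eye, nearby words can also be ranked numerically. The sketch below uses Euclidean distance on made-up 2-D vectors; the helper name `nearest_words` and the toy coordinates are assumptions, standing in for the `xaxis` / `yaxis` columns of the DataFrame.

```python
import numpy as np

def nearest_words(query, words, vectors, top_k=2):
    """Rank the other words by Euclidean distance to `query` (closest first)."""
    vectors = np.asarray(vectors, dtype=float)
    dists = np.linalg.norm(vectors - vectors[words.index(query)], axis=1)
    order = np.argsort(dists)
    return [(words[i], float(dists[i])) for i in order if words[i] != query][:top_k]

# Toy 2-D vectors (made up), one per word.
words = ["say", "know", "pick", "plan"]
vectors = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]

neighbors = nearest_words("say", words, vectors)
print(neighbors)
```

With real data, one vector per word would first have to be chosen (the test set contains the same word many times), which this sketch leaves out.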

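Skip-Gram Negative Sampling(SGNS)

- Skip-Gram is computationally heavy because it uses softmax; SGNS addresses this drawback by using sigmoid instead.

    - Instead of a probability distribution over the whole vocabulary, the output is a single binary classification: 0 or 1.

    - The amount of computation therefore drops.

- Method:

    - Skip-Gram Negative Sampling: word pairs selected by n-gram are given label = 1 and randomly chosen word pairs are given label = 0, and the model performs binary classification. Pairs selected by n-gram are treated as related words.

    - Feed the input value and the target value into two separate inputs.

    - Compute a vector for each.

    - Combine the two vectors (concat, dot, or add).

    - Apply sigmoid

    - so that the output is the label (0 or 1).

    - Once training is complete, feeding a specific word into the left-hand (input-word) network yields the word vector for that word.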
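The forward pass described above (two embedding lookups, a dot product, a sigmoid, and a binary cross-entropy loss) can be sketched in NumPy. The sizes, the matrix names `E_in` / `E_tgt`, and the example pairs are assumptions for illustration; the weights are random, and no training step is shown.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, dim = 10, 4     # toy sizes (assumptions)
E_in = rng.normal(scale=0.1, size=(vocab_size, dim))    # embedding of the input word
E_tgt = rng.normal(scale=0.1, size=(vocab_size, dim))   # embedding of the target word

def sgns_forward(inputs, targets):
    """Embedding lookup -> dot product -> sigmoid: one probability per pair."""
    v_in = E_in[inputs]                     # (batch, dim)
    v_tgt = E_tgt[targets]                  # (batch, dim)
    logits = (v_in * v_tgt).sum(axis=1)     # dot product of each pair
    return 1.0 / (1.0 + np.exp(-logits))

def binary_crossentropy(labels, preds, eps=1e-7):
    preds = np.clip(preds, eps, 1 - eps)
    return float(-(labels * np.log(preds) + (1 - labels) * np.log(1 - preds)).mean())

inputs = np.array([7, 7, 3])            # input-word indices
targets = np.array([8, 2, 5])           # target-word indices
labels = np.array([1.0, 0.0, 1.0])      # 1 = n-gram pair, 0 = randomly chosen pair

preds = sgns_forward(inputs, targets)
loss = binary_crossentropy(labels, preds)
word_vector = E_in[7]    # after training, this row would be the vector for word 7
print(preds, loss)
```

Looking up a row of `E_in` corresponds to feeding a single word into the input-word branch of the trained network.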

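Differences between Skip-Gram and Skip-Gram Negative Sampling

| Skip-Gram | Skip-Gram Negative Sampling |
|---|---|
| One input. input: input data; output: target data | Two inputs. input[1]: input data; input[2]: target data; output: label |
| No label | Has a label of 1 or 0 (binary classification). Word pairs selected by n-gram get label = 1; randomly chosen word pairs get label = 0 |
| Output layer: softmax, producing values between 0 and 1, with loss='categorical_crossentropy'; argmax() is therefore applied to the output | Output layer: sigmoid for binary classification, with loss="binary_crossentropy" |
| No distance computation (cosine, etc.) is performed. Words with contextually similar meanings can be found by, for example, plotting the x, y coordinates that come out of the latent layer. | Distance computation is possible. When the vectors from the two inputs are merged with dot, this amounts to a distance-like similarity computation; a cosine function can also be used here. With concat or add, however, no distance is computed. |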

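Using the SGNS Embedding

- Preprocess the raw data

- Generate the training data with trigrams

- Generate positive (1) and negative (0) data: the SGNS training data

```python
# Negative samples: pair each center word with a random word index.
# The upper bound of np.random.randint is exclusive, hence the + 1.
rand_word = np.random.randint(1, len(word2idx) + 1, len(xs))
x_pos = np.vstack([xs, ys]).T
x_neg = np.vstack([xs, rand_word]).T

y_pos = np.ones(x_pos.shape[0]).reshape(-1, 1)
y_neg = np.zeros(x_neg.shape[0]).reshape(-1, 1)

x_total = np.vstack([x_pos, x_neg])
y_total = np.vstack([y_pos, y_neg])
X = np.hstack([x_total, y_total])
np.random.shuffle(X)
```

- Build the SGNS model: embedding, dot, reshape, sigmoid, binary_crossentropy, etc.

- Train the SGNS model

- Build the SGNS Embedding model and save only that model's weights (w) separately

- Up to this point is the procedure for building a general-purpose SGNS Embedding.

- From here on, the Embedding learned with SGNS is applied to arbitrary word data.

- Split the raw data into train/test data

- Build the CNN model to be used (up to compile)

- Before training the CNN model, load the SGNS Embedding weights (w)

- When fitting the CNN model, apply the W learned by SGNS:

```python
model.layers[1].set_weights(We)
```

- Plot the results or check performance

- Performance check:

```python
from sklearn.metrics import accuracy_score

y_pred = model.predict(x_test)
y_pred = np.where(y_pred > 0.5, 1, 0)
print("Test accuracy:", accuracy_score(y_test, y_pred))
```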

- Google's trained Word2Vec model:

    - Uses the SGNS method

    - Pre-trained

    - Documents → vectorization (numericization) → can be fed directly into an ordinary DL model

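Open questions on applications and extensions

- In the Word2Vec code, indices were assigned in order of frequency.

```python
word2idx = {w: (i + 1) for i, (w, _) in enumerate(counter.most_common())}
```

- But right after that, when building the training data from trigrams, word2idx was really only used to look words up.

```python
embedding = [word2idx[w.lower()] for w in nltk.word_tokenize(line)]
triples = list(nltk.trigrams(embedding))
```

- Suppose the embedding above is sorted so that the idx numbers are reordered, or the embedding is moved into the pre-processing step. If a CNN or LSTM model is then run, words with similar frequency would presumably be grouped together. Distances would then be measured in order of word importance. So what if we find the word with index number 1, compute the distance between it and every other word, and set aside the words that fall outside a certain score range?

- For example, measure the distance between happy, the most frequent word, and the other words, and collect those with a cosine similarity of 0.5 or lower into Another_vocab. What remains in the original vocab would be the core keywords of that Document or Sentence, its protagonists (although in some cases they might be words that are not needed).

- Run the same process on another document to create Another_vocab_2.

- Then compute the cosine similarity between Another_vocab and Another_vocab_2. Aggregated along an axis, that similarity would presumably reflect how much the words of the two documents overlap.

- If this were applied year by year to 100 years of newspaper data, and the 1988 and 2020 newspapers turned out to be highly similar, could we say that the public's interests in 1988 and in 2020 coincide?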
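The proposal above can be made concrete with a rough sketch. Everything here is an assumption made for illustration: the toy word vectors, the helper names `another_vocab` and `vocab_similarity`, and in particular the choice to compare the two vocabularies as binary bag-of-words vectors, which is only one possible reading of "cosine similarity between Another_vocab and Another_vocab_2".

```python
import numpy as np

def cosine(a, b):
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def another_vocab(vectors, anchor, threshold=0.5):
    """Words whose cosine similarity to the anchor word is <= threshold."""
    return {w for w, v in vectors.items()
            if w != anchor and cosine(v, vectors[anchor]) <= threshold}

def vocab_similarity(vocab_a, vocab_b):
    """Cosine similarity of two word sets viewed as binary bag-of-words vectors."""
    if not vocab_a or not vocab_b:
        return 0.0
    return float(len(vocab_a & vocab_b) / np.sqrt(len(vocab_a) * len(vocab_b)))

# Made-up word vectors for two documents; "happy" plays the most frequent word.
doc1 = {"happy": [1, 0], "glad": [0.9, 0.1], "tax": [0, 1], "vote": [0.1, 1]}
doc2 = {"happy": [1, 0], "joy": [1, 0.2], "tax": [0.1, 1], "war": [0, 1]}

vocab1 = another_vocab(doc1, "happy")
vocab2 = another_vocab(doc2, "happy")
similarity = vocab_similarity(vocab1, vocab2)
print(vocab1, vocab2, similarity)
```

Under this reading, the score depends only on which words the two sets share, so it measures word overlap between documents rather than any deeper semantic similarity.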

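References:

Amateur Quant (아마추어 퀀트), blog.naver.com/chunjein

Code source: Krishna Bhavsar et al. 2019.01.31. 자연어 처리 쿡북 with 파이썬 [파이썬으로 NLP를 구현하는 60여 가지 레시피] (Korean edition of Natural Language Processing with Python Cookbook). 에이콘 (Acorn).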